☞ [Demo Video - Trimmed for UK]

Additional Individual Examples with Isolated Stems

| | Music | Speech | SFX |
|---|---|---|---|
| Jimmy Fallon | Link | Link | Link |
| FlexTape Commercial | Link | Link | Link |
| Spiderman | Link | Link | Link |
| Gordon Ramsey (Unavailable in UK) | Link | Link | Link |

Resources page for the Divide and Remaster (DnR) dataset introduced as part of the Cocktail Fork Problem paper

The DnR dataset is built from three well-established audio datasets: LibriSpeech, Free Music Archive (FMA), and Freesound Dataset 50k (FSD50K). We offer the dataset at both 16kHz and 44.1kHz sampling rates, along with time-stamped annotations for each of the classes (genre for ‘music’, audio tags for ‘sound-effects’, and transcription for ‘speech’). Below, we provide more information on how the dataset is built and what exactly it consists of. We also go over the process of building the dataset from scratch, for cases where that is needed.

- Official Codebase and Experiment Reproduction

- Dataset Overview

- Get the DnR Dataset

- Dataset Analysis

- Citation

- Contact and Support

Official Codebase and Experiment Reproduction

To access the official paper codebase, which includes a pre-trained checkpoint, the proposed MRX model, and the full training and evaluation pipeline, please visit MERL's official CFP GitHub page.

Dataset Overview

The Divide and Remaster (DnR) dataset aims to support research on a relatively unexplored case of source separation: mixtures whose sources are music, speech, and sound effects (SFX). The dataset is built from three well-established datasets. Consequently, if one wants to build DnR from scratch, these datasets have to be downloaded first. Alternatively, DnR is also available on Zenodo.

Get the DnR Dataset

In order to obtain DnR, several options are available depending on the task at hand:

Download

- DnR-HQ (44.1kHz) is available on Zenodo. Note: we recently fixed several issues found in the original dataset (mainly regarding annotations), and an updated version has been uploaded to Zenodo. Make sure you use it instead. More information is available at the Zenodo link.

- Alternatively, if DnR-16kHz is needed, please first download DnR-HQ locally. You can then downsample the dataset (either in-place or not) by cloning the dnr-utils repository and running:

python dnr_utils.py --task=downsample --inplace=True
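For reference, going from 44.1kHz to 16kHz is a rational-ratio resampling. The sketch below is not taken from dnr_utils.py; it only works out that ratio and the resulting number of samples. The function names are hypothetical and chosen for illustration.

```python
from fractions import Fraction
import math


def resample_ratio(src_rate: int, dst_rate: int) -> Fraction:
    """Return the exact dst/src sampling-rate ratio in lowest terms."""
    return Fraction(dst_rate, src_rate)


def resampled_length(num_samples: int, src_rate: int, dst_rate: int) -> int:
    """Number of samples after resampling, rounded up to cover the full signal."""
    return math.ceil(num_samples * resample_ratio(src_rate, dst_rate))


ratio = resample_ratio(44_100, 16_000)
# Numerator and denominator are the up/down factors a polyphase resampler would use.
print(ratio.numerator, ratio.denominator)
# Number of samples in one minute of audio once converted to 16 kHz.
print(resampled_length(44_100 * 60, 44_100, 16_000))
```

Working with an exact fraction avoids floating-point drift when computing output lengths for long files.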

Building DnR From Scratch

In this section, we go over the DnR building process. Since DnR is directly drawn from FSD50K, LibriSpeech/LibriVox, and FMA, we first need to download these datasets. Please head to the following links for more details on how to get them:

Datasets Downloads

FSD50K

FMA-Medium Set

LibriSpeech/LibriVox

Please note that for FMA, only the medium set is required. In addition to the audio files, the metadata should also be downloaded. For LibriSpeech, DnR uses dev-clean, test-clean, and train-clean-100. DnR uses the folder structure and metadata from LibriSpeech, but ultimately builds the LibriSpeech-HQ dataset from the original LibriVox mp3s, which is why both are needed for building DnR.

After download, all four datasets are expected to be found in the same root directory. The root tree may look something like the one below. As the standardization script will look for specific file names, please make sure that all directory names conform to the ones described below:

    root
    ├── fma-medium
    │   ├── fma_metadata
    │   │   ├── genres.csv
    │   │   └── tracks.csv
    │   ├── 008
    │   ├── 009
    │   └── 010
    │       └── ...
    ├── fsd50k
    │   ├── FSD50K.dev_audio
    │   ├── FSD50K.eval_audio
    │   └── FSD50K.ground_truth
    │       ├── dev.csv
    │       ├── eval.csv
    │       └── vocabulary.csv
    ├── librispeech
    │   ├── dev-clean
    │   ├── test-clean
    │   └── train-clean-100
    └── librivox
        ├── 14
        ├── 16
        └── 17
            └── ...
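Before running the standardization step, it can help to confirm that the layout matches. The following is a minimal sketch of such a check; the helper `missing_entries` is hypothetical and not part of dnr-utils, and the paths are simply copied from the tree in this section.

```python
from pathlib import Path
from typing import List

# Directory and file names copied from the expected root tree.
# The checker itself is a hypothetical helper, not part of dnr-utils.
EXPECTED_DIRS = [
    "fma-medium/fma_metadata",
    "fsd50k/FSD50K.dev_audio",
    "fsd50k/FSD50K.eval_audio",
    "fsd50k/FSD50K.ground_truth",
    "librispeech/dev-clean",
    "librispeech/test-clean",
    "librispeech/train-clean-100",
    "librivox",
]
EXPECTED_FILES = [
    "fma-medium/fma_metadata/genres.csv",
    "fma-medium/fma_metadata/tracks.csv",
    "fsd50k/FSD50K.ground_truth/dev.csv",
    "fsd50k/FSD50K.ground_truth/eval.csv",
    "fsd50k/FSD50K.ground_truth/vocabulary.csv",
]


def missing_entries(root: str) -> List[str]:
    """Return the expected directories and files that are absent under root."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (base / f).is_file()]
    return missing
```

Calling `missing_entries("root")` returns an empty list when every listed directory and file is present, and the relative paths of whatever is missing otherwise.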

Datasets Standardization

Once all four datasets are downloaded, some standardization work needs to be taken care of. The standardization process can be executed by running standardization.py, which can be found in the dnr-utils repository. Before running the script, you may want to install the necessary dependencies listed in requirements.txt with pip install -r requirements.txt.

Note: pydub uses ffmpeg under the hood, so a system install of ffmpeg is required. Please see pydub’s install instructions for more information.
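A quick way to confirm that ffmpeg is reachable before starting a long standardization run is to look it up on the PATH. This is a small sketch using only the Python standard library; the function name `ffmpeg_available` is hypothetical.

```python
import shutil


def ffmpeg_available(executable: str = "ffmpeg") -> bool:
    """Return True if the given executable can be found on the PATH."""
    return shutil.which(executable) is not None


if not ffmpeg_available():
    print("ffmpeg not found on PATH; pydub will not be able to decode mp3 files.")
```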

The standardization command may look something like:

python standardization.py --fsd50k-path=./FSD50K --fma-path=./FMA --librivox-path=./LibriVox --librispeech-path=./LibriSpeech --dest-dir=./dest --validate-audio=True

DnR Dataset Compilation

Once the three resulting datasets are standardized, we are ready to finally compile DnR. At this point you should already have cloned the dnr-utils repository, which contains two key files:

- config.py contains some configuration entries needed by the main script builder. You want to set all the appropriate paths pointing to your local datasets and ground truth files in there.

- The compilation for a given set (here, train, val, and eval) can be executed with compile_dataset.py, for example by running the following commands for each set:

python compile_dataset.py with cfg.train

python compile_dataset.py with cfg.val

python compile_dataset.py with cfg.eval
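If you prefer to launch the three compilations from a single script, the commands can be wrapped as follows. This is only a sketch: the helper names and the dry-run default are assumptions made for illustration, and it presumes the script is started from the dnr-utils directory.

```python
import subprocess
import sys
from typing import List

SPLITS = ("train", "val", "eval")  # the cfg.* names used by compile_dataset.py


def compile_command(split: str) -> List[str]:
    """Build the argument list for one `compile_dataset.py with cfg.<split>` call."""
    if split not in SPLITS:
        raise ValueError(f"unknown split: {split!r}")
    return [sys.executable, "compile_dataset.py", "with", f"cfg.{split}"]


def compile_all(dry_run: bool = True) -> None:
    """Run (or, by default, just print) the compilation command for every split."""
    for split in SPLITS:
        cmd = compile_command(split)
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first split that fails.
            subprocess.run(cmd, check=True)
```

Calling `compile_all()` prints the three commands; `compile_all(dry_run=False)` actually runs them in order.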

Known Issues

Below are some known bugs and issues that we’re aware of. If yours is not listed, feel free to open a new issue here:

- If building from scratch, pydub will fail at reading 15 mp3 files from the FMA medium set and will return the following error: [mp3 @ 0x559b8b084880] Failed to read frame size: Could not seek to 1026.

- If building DnR from scratch, the script may return the following error, coming from pyloudnorm: Audio must be have length greater than the block size. That’s because some audio segments, especially SFX events, may be shorter than 0.2 seconds, which is the minimum sample length (window) required by pyloudnorm for normalizing the audio. We just ignore these segments.
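The idea of skipping segments that are too short to normalize can be sketched as below. This is an illustration, not the actual dnr-utils code: the function names are hypothetical, and the 0.2-second threshold is simply the value quoted in this section.

```python
from typing import Iterable, List

# Minimum duration quoted in the text; adjust it if your block size differs.
MIN_DURATION_S = 0.2


def long_enough(num_samples: int, sample_rate: int,
                min_duration_s: float = MIN_DURATION_S) -> bool:
    """Return True if a segment lasts at least min_duration_s seconds."""
    return num_samples / sample_rate >= min_duration_s


def keep_normalizable(segment_lengths: Iterable[int], sample_rate: int) -> List[int]:
    """Keep only the segment lengths (in samples) that are long enough to normalize."""
    return [n for n in segment_lengths if long_enough(n, sample_rate)]
```

Filtering up front like this avoids relying on the exception as control flow when iterating over many short SFX events.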

Dataset Analysis

The file length distributions of the four DnR building blocks are as follows. Note that FMA files occupy a single bin since all files are 30 seconds long:

We also measure the amount of inter-class overlap, here for the whole DnR training set. Throughout the building process, we make sure to cover all overlapping scenarios, while emphasizing the “all-classes” one (accounting for 50% of the data):

The average file length per class is also provided below:

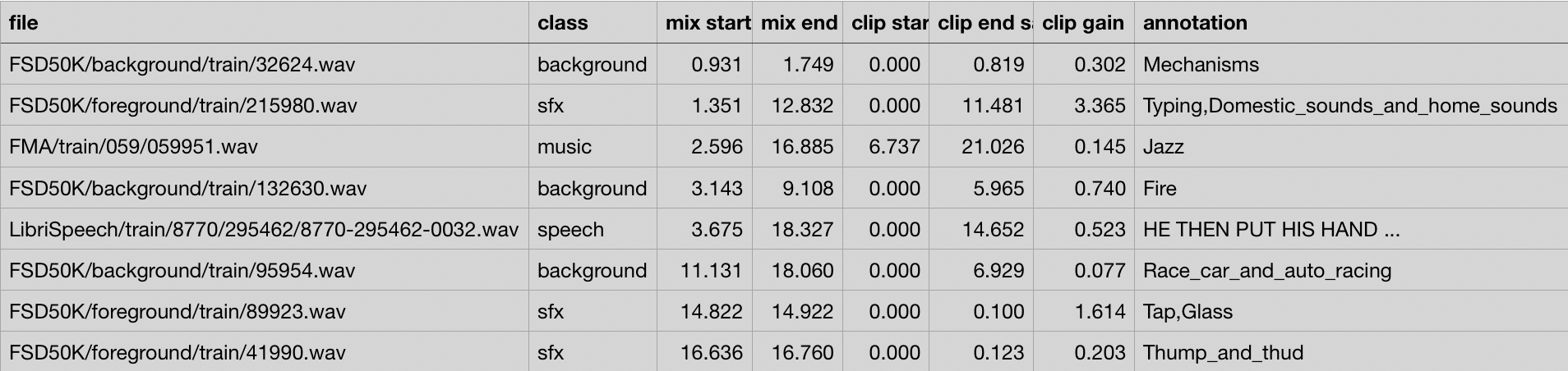

Annotations for each class are provided with time-stamps:

Experimental Validation

| | Music (16 kHz) | Speech (16 kHz) | SFX (16 kHz) | Music (44.1 kHz) | Speech (44.1 kHz) | SFX (44.1 kHz) |
|---|---|---|---|---|---|---|
| | -6.61 | 1.04 | -5.19 | -6.70 | 0.94 | -4.94 |
| | 11.99 | 18.02 | 13.60 | 11.99 | 18.03 | 13.83 |
| | 2.38 | 10.98 | 3.99 | 2.06 | 10.72 | 4.01 |
| | 2.74 | 11.01 | 4.02 | 2.28 | 10.66 | 4.22 |
| | 3.73 | 12.32 | 4.44 | 3.45 | 12.02 | 4.09 |
| | 3.37 | 11.77 | 4.73 | 3.5 | 11.79 | 5.36 |
| | 3.83 | 12.04 | 4.92 | 3.72 | 12.06 | 5.33 |
| | 3.51 | 11.4 | 4.49 | 3.52 | 11.02 | 4.86 |
| | 3.06 | 10.34 | 3.93 | 3.51 | 10.95 | 4.81 |
| | 3.84 | 11.99 | 4.99 | 3.87 | 12.07 | 5.46 |
| | 3.76 | 12.78 | 4.32 | 3.66 | 12.58 | 5.05 |
| | 4.39 | 12.56 | 5.4 | 4.42 | 12.65 | 5.87 |

Citation

If you use DnR, please cite our paper, in which we introduce the dataset as part of the Cocktail Fork Problem:

    @InProceedings{Petermann2022CFP,
      author = {Petermann, Darius and Wichern, Gordon and Wang, Zhong-Qiu and {Le Roux}, Jonathan},
      title = {The Cocktail Fork Problem: Three-Stem Audio Separation for Real-World Soundtracks},
      booktitle = {2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
      year = 2022,
      month = may
    }

Contact and Support

Have an issue, concern, or question about DnR or its utility tools? If so, please open an issue here.

For any other inquiries, feel free to shoot an email at: firstname.lastname@gmail.com, my name is Darius Petermann ;)